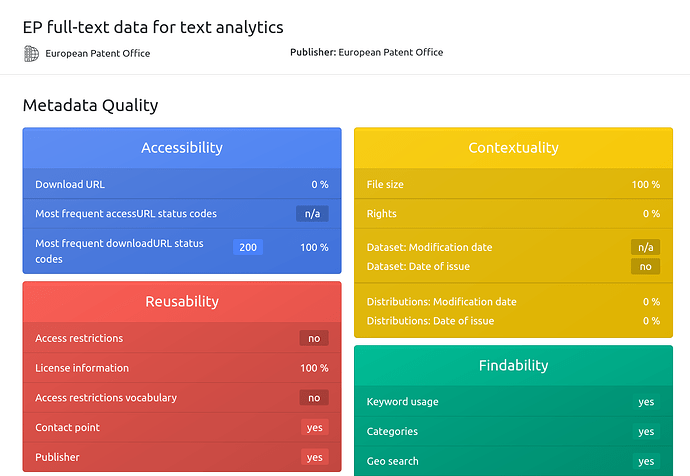

As tweetnounced, the European open data portal just relaunched with a new design. Curious about the changes, I browsed around a bit and found a Quality button in the menu, next to “Similar Datasets”, which leads to:

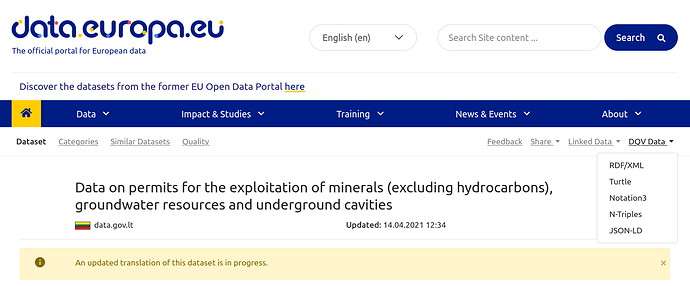

Along with this nice drop-down menu for developers:

At the moment there is an error on some datasets when using this feature, stay tuned …

https://twitter.com/DataEuropa/status/1386692397556457476

The refreshed design really caught my eye, even though I recall some of this functionality being visible in the old portal. According to the release notes, it has been there since 2019, and the developer button was added in November.

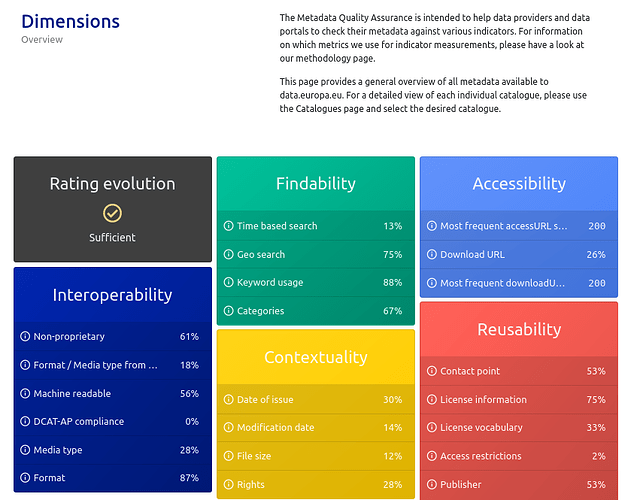

The new Metadata Quality dashboard shown on the home page summarizes the results “interactively” across the site.

This is based on the W3C’s Data Quality Vocabulary (DQV), a “framework in which the quality of a dataset can be described, whether by the dataset publisher or by a broader community of users. It does not provide a formal, complete definition of quality, rather, it sets out a consistent means by which information can be provided such that a potential user of a dataset can make his/her own judgment about its fitness for purpose”:

The Data Quality Vocabulary (DQV) presented in this document is foreseen as an extension to the DCAT vocabulary [vocab-dcat] to cover the quality of the data, how frequently is it updated, whether it accepts user corrections, persistence commitments etc. When used by publishers, this vocabulary will foster trust in the data amongst developers. This vocabulary does not seek to determine what “quality” means. We believe that quality lies in the eye of the beholder; that there is no objective, ideal definition of it. Some datasets will be judged as low-quality resources by some data consumers, while they will perfectly fit others’ needs. In accordance, we attach a lot of importance to allowing many actors to assess the quality of datasets and publish their annotations, certificates, opinions about a dataset.
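To make this a bit more concrete, here is a minimal sketch of what a single quality statement could look like when written with DQV terms as JSON-LD. The class and property names (`dqv:QualityMeasurement`, `dqv:computedOn`, `dqv:isMeasurementOf`, `dqv:value`) come from the DQV namespace; the dataset and metric IRIs and the `quality_measurement` helper are made up for illustration and are not taken from the portal.

```python
import json

# Namespace of the W3C Data Quality Vocabulary.
DQV = "http://www.w3.org/ns/dqv#"


def quality_measurement(dataset_iri, metric_iri, value):
    """Return a JSON-LD dict describing one dqv:QualityMeasurement."""
    return {
        "@context": {"dqv": DQV},
        "@type": "dqv:QualityMeasurement",
        # The resource that was assessed.
        "dqv:computedOn": {"@id": dataset_iri},
        # The metric that was applied to it.
        "dqv:isMeasurementOf": {"@id": metric_iri},
        # The outcome of the assessment.
        "dqv:value": value,
    }


measurement = quality_measurement(
    "https://example.org/dataset/air-quality",       # hypothetical dataset IRI
    "https://example.org/metrics/machine-readable",  # hypothetical metric IRI
    True,
)
print(json.dumps(measurement, indent=2))
```

Anyone can publish such a statement about a dataset, which matches the “many actors” idea in the quote above.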

DQV support is part of the v2 release of the CKAN DCAT extension, which has been available for a while. Why are we not seeing this highly useful-sounding instrument on more portals? We can look forward to continued work on the topic on the opendata.swiss side, the building blocks of which can be found in the OGD Handbook.

In the meantime, here is what the data.europa.eu FAQ has to say about it:

The datasets stored in the portal need to be of an appropriate quality in terms of:

- DCAT-AP-compliant mapping

- Available distributions

- Usage of machine-readable distribution formats

- Usage of known open-source licences.

To check the datasets for these quality indicators the Metadata Quality Assurance (MQA) tool was developed. The MQA runs as a periodic process in parallel to the harvesting. CKAN and Virtuoso are filled with metadata through the harvesting process. As CKAN cannot store DCAT-AP-formatted datasets directly, the datasets are mapped into a JSON (JavaScript Object Notation) schema that is DCAT-AP compliant. The MQA uses this schema for checking each dataset for its DCAT-AP mapping compliance. If there are any compliance issues detected, for instance if a mandatory field is missing, the dataset is considered as not DCAT-AP compliant.
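As a rough illustration of the mandatory-field rule described above (my own sketch, not the MQA's actual code), a check over a JSON-mapped dataset might look like this. The key names in `MANDATORY_FIELDS` are assumptions; the portal's real schema may use different ones.

```python
# Hypothetical mandatory keys; the portal's real JSON schema may differ.
MANDATORY_FIELDS = ("title", "description")


def missing_mandatory_fields(dataset):
    """Return the mandatory keys that are absent or empty in a dataset dict."""
    return [field for field in MANDATORY_FIELDS if not dataset.get(field)]


def is_compliant(dataset):
    """A dataset counts as compliant only if no mandatory field is missing."""
    return not missing_mandatory_fields(dataset)


complete = {"title": "Air quality", "description": "Hourly measurements"}
incomplete = {"title": "Air quality"}

print(is_compliant(complete))
print(missing_mandatory_fields(incomplete))
```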

The MQA presents its results in two views.

- The landing page or ‘Global Dashboard’. This view shows aggregated results for the entire service, i.e. the quality details for all catalogues.

- The second view or ‘Catalogue Dashboard’. This view allows you to select a specific catalogue for which you want to display the quality details.

The current quality indicators include the following:

Distribution statistics:

- accessible distributions

- error status codes

- download URL existence

- top 20 catalogues with most accessible distributions

- ratio of machine-readable datasets

- most-used distribution formats

- top 20 catalogues mostly using common machine-readable datasets

Dataset compliance statistics:

- top violation occurrences

- compliant datasets

- top 20 catalogues with most DCAT-AP-compliant datasets

Dataset licence usage:

- ratio of known to unknown licences

- most used licences

- top 20 catalogues with most datasets with known licences
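To get a feel for how a few of these indicators could be aggregated, here is a small sketch over an in-memory list of dataset records. Everything in it is assumed for the sake of the example: the record layout (`formats`, `licence`), the sets of machine-readable formats and known licences, and the `summarize` helper. It is not how the MQA computes its dashboard.

```python
from collections import Counter

# Assumed reference lists; the portal maintains its own.
MACHINE_READABLE = {"csv", "json", "xml", "rdf"}
KNOWN_LICENCES = {"cc-by-4.0", "cc0-1.0"}


def ratio(part, whole):
    """Share of `part` in `whole`, or 0.0 when there is nothing to count."""
    return part / whole if whole else 0.0


def summarize(datasets):
    """Aggregate a few dashboard-style indicators over dataset records."""
    total = len(datasets)
    # A dataset counts as machine-readable if any of its formats is.
    machine_readable = sum(
        1 for d in datasets
        if any(fmt.lower() in MACHINE_READABLE for fmt in d.get("formats", []))
    )
    known = sum(
        1 for d in datasets
        if (d.get("licence") or "").lower() in KNOWN_LICENCES
    )
    formats = Counter(
        fmt.lower() for d in datasets for fmt in d.get("formats", [])
    )
    return {
        "machine_readable_ratio": ratio(machine_readable, total),
        "known_licence_ratio": ratio(known, total),
        "most_used_formats": formats.most_common(3),
    }


# Made-up sample records.
sample = [
    {"formats": ["CSV"], "licence": "CC-BY-4.0"},
    {"formats": ["PDF"], "licence": "CC0-1.0"},
    {"formats": ["JSON", "PDF"], "licence": None},
    {"formats": [], "licence": "proprietary"},
]
summary = summarize(sample)
print(summary)
```

The same pattern would extend to per-catalogue rankings by grouping the records by catalogue before summarizing.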

If you want to access this for reuse outside of the portal, you have a plethora of linked data formats to choose from. The W3C keeps a list of DQV-compatible tools here.

What kind of metric would you want to see to make an informed decision about “fitness for purpose”?

See also: The hitchhiker’s guide to Swiss Open Government Data