The first MakeZurich in 2017 was awesome. Here is the data:

Stay tuned and get ready to rock your open hardware

The countdown is running! We are only one week away from kicking off the second edition of Make Zurich, and we’re more than thrilled about what this year will bring. Do you have ideas for the Open challenge? Please add them to the idea pool:

A nice recap by @gnz of this summer’s open networking infrastructure event can be found at thethingsnetwork.org forum.

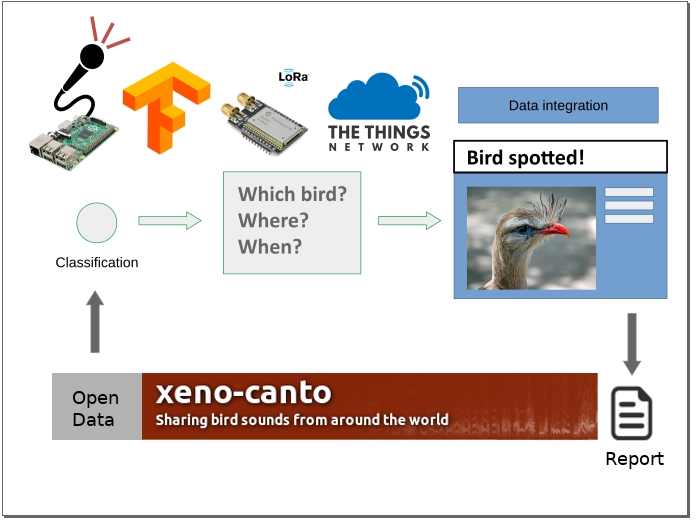

The project I worked on with @afsoonica addresses the City Forest Visitors challenge using sound measurements and machine learning techniques. We have creatively interpreted “Visitors” to also mean animals, initially focusing on birds.

Every living thing in a forest has its own sound, so sound detection is a promising way to identify, classify and trace creatures. With a focus on staying within or close to the low-power parameters of LoRaWAN, we want to monitor the sounds of the forest over long periods of time or in remote areas.

Unlike smartphone apps, whose data depends on the user’s schedule, the data produced by our sensor could potentially cover the entire day and night. An important use case could also be monitoring the interaction between animals and humans. Our dataset could easily encompass bird and human sounds (footsteps, voices, …) to study behavior patterns. An exemplary target group would be bird watchers. Bird watching is a popular pastime, and several apps exist for detecting and recording bird songs.

The low-cost approach facilitated by The Things Network means that this project could be reproduced by scientists, city planners and amateurs alike. What we tried:

- Did research into the idea, discovering numerous projects and even whole competitions to automatically detect and classify bird song. These led us to datasets, sample code, and pro tips.

- Started putting together a training dataset with crowdsourced (Xeno-Canto) bird song samples from the Zürich region. Download it here

- We use a simple feedforward neural network to identify the species in the forest, and have started work on a training and classification script. Currently the code closely follows GianlucaPaolocci/Sound-classification-on-Raspberry-Pi-with-Tensorflow

- Training Model: We ran the training on our local PC, using the crowdsourced data as input to train our 3-layer neural network. Once the network is trained, all we need on the Raspberry Pi for classification is the network topology and the final set of weights.

- Classification Model: The classification network runs on the Raspberry Pi, which we install in the forest together with the detection device. As soon as the microphone detects a sound, the device records it, feeds it to the network as input and identifies it. Using TTN, the device then sends an alert to the user, e.g. to the birdwatcher, in real time. The alert contains the species as well as the time and location of detection.

- We investigated the option of attaching a LoRaWAN antenna directly to the Raspberry Pi, but decided to keep an Arduino as part of our hack, which we communicate with via USB serial.
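To give an idea of how a classification result could become an alert small enough for LoRaWAN, here is a minimal sketch in Python. It is not the code from our hack: the species list, the 14-byte payload layout and the function names are all assumptions made up for illustration. It takes the raw output scores of a classifier, picks the most likely species, and packs species, confidence, time and location into a few bytes that could then be handed to the Arduino over USB serial.

```python
import math
import struct
import time

# Hypothetical label order; the real list depends on the training dataset.
SPECIES = ["blackbird", "great_tit", "robin", "human_voice"]

# Assumed payload layout (big-endian, 14 bytes in total):
# uint8 species index, uint8 confidence in percent, uint32 Unix time in seconds,
# int32 latitude and int32 longitude in units of 1e-5 degrees.
PAYLOAD_FORMAT = ">BBIii"


def softmax(scores):
    """Turn raw network outputs into probabilities that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def encode_alert(scores, lat, lon, timestamp=None):
    """Pick the most likely species and pack the alert into bytes."""
    probs = softmax(scores)
    index = max(range(len(probs)), key=probs.__getitem__)
    if timestamp is None:
        timestamp = int(time.time())
    return struct.pack(
        PAYLOAD_FORMAT,
        index,
        round(probs[index] * 100),
        int(timestamp),
        round(lat * 1e5),
        round(lon * 1e5),
    )


def decode_alert(payload):
    """Inverse of encode_alert, e.g. for the application receiving the uplink."""
    index, confidence, timestamp, lat, lon = struct.unpack(PAYLOAD_FORMAT, payload)
    return {
        "species": SPECIES[index],
        "confidence": confidence,
        "timestamp": timestamp,
        "lat": lat / 1e5,
        "lon": lon / 1e5,
    }
```

Sending a fixed binary layout instead of text keeps the message short, which matters for the airtime limits of LoRaWAN. A species index only makes sense if sender and receiver agree on the same label list, so in practice that list would have to be versioned along with the trained weights.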

Details and links to the source can be found here:

Visit Twitter for more impressions of #makezurich 2018!